[AI News] LLMs Hit a Wall, Enterprise AI Boom, ChatGPT’s New Desktop Power, and Open-Source AlphaFold

The Scaling Wall: Are We Near the End of Better AI Models?

Happy Sunday, fellow AI enthusiasts,

We’re used to seeing new, better large language models arrive almost monthly. The belief behind this rapid progress has been simple: more data and more compute lead to better AI. But reports from Reuters, Bloomberg, and The Information this week suggest we may be hitting limits to this approach.

Big AI labs like OpenAI and Anthropic say otherwise. Sam Altman, OpenAI’s CEO, is optimistic, even suggesting superintelligence could arrive in "a few thousand days." Anthropic is equally confident, projecting AGI by 2027.

But let’s assume scaling limits are real—is that a problem? Probably not.

Here’s my thinking:

There’s still plenty of value left to unlock from current models. We’re just scratching the surface of integrating AI deeply into real-world systems, particularly in enterprise settings. For example, OpenAI and Anthropic have recently focused on making their models more practical, whether by integrating them into tools like VS Code or by letting them operate a computer directly, rather than only building bigger models.

Moreover, bigger might not be the future. Instead of one massive model, the next wave of AI could come from a collection of smaller, specialized models working together in agentic workflows. Replit’s AI agent, for example, coordinates off-the-shelf models to achieve impressive results.

In short, even if scaling larger models slows, the opportunities for today's AI are immense. We’ve only just begun integrating these capabilities into industries in meaningful ways. Whether through smarter integrations, more practical applications, or specialized model collaborations, the AI journey is far from over—it’s simply evolving into its next exciting phase.

Hope you have a great week!

-Manny

[RESEARCH]

Databricks State of Data + AI Report Shows Massive Surge in Enterprise AI Adoption

Databricks' latest State of Data + AI report reveals a dramatic shift in enterprise AI implementation, with organizations becoming significantly more efficient at deploying AI models and heavily investing in LLM customization. The report indicates a clear move from experimentation to production, with regulated industries surprisingly leading the charge in AI adoption.

Highlights:

Organizations achieved an 11x increase in AI models deployed to production compared to the previous year, with companies becoming 3x more efficient at deployment

76% of companies that use LLMs are choosing open-source models, often alongside proprietary ones, with a preference for smaller models (13B parameters or fewer)

Vector database usage grew 377% year-over-year as companies rush to implement Retrieval Augmented Generation (RAG) with their private data

Natural Language Processing (NLP) emerged as the most used and fastest-growing machine learning application

Financial Services leads GPU usage for LLMs with 88% growth over 6 months

Healthcare & Life Sciences and Manufacturing & Automotive show strong adoption of foundation model APIs

LangChain became a top 4 tool within just one year of integration

Meta Llama 3 accounted for 39% of open-source LLM usage within just 4 weeks of launch

Companies are increasingly balancing trade-offs between cost, performance, and latency in LLM implementation

Highly regulated industries are surprisingly leading AI adoption, particularly in customization and governance

Key Takeaways: The report indicates a significant maturation in enterprise AI adoption, with organizations moving beyond experimental phases to practical implementation. The strong preference for RAG and open-source tools suggests companies are prioritizing customization and control over their AI solutions. As regulated industries lead this charge, it signals growing confidence in AI governance and security measures, potentially paving the way for wider adoption across all sectors. → Read the full report here.
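For readers wondering what RAG over a vector store involves, here is a minimal sketch of the retrieval step. It is a toy under loud assumptions: the "embedding" is a plain bag-of-words count rather than a learned model, the documents are made up, and the helper names (`embed`, `retrieve`, `build_prompt`) are hypothetical rather than any vendor's API. A production setup would swap in a real embedding model and a vector database.

```python
import math
from collections import Counter

def embed(text):
    """Toy 'embedding': word counts. Real systems use a learned embedding model."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Hypothetical private documents standing in for a company's data.
documents = [
    "refund requests are processed within five business days",
    "the office is closed on public holidays",
    "gpu clusters are reserved through the internal portal",
]

# The "vector database": each document stored next to its vector.
index = [(doc, embed(doc)) for doc in documents]

def retrieve(question, k=1):
    """Return the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]

def build_prompt(question):
    """Place the retrieved context ahead of the question for the LLM."""
    context = "\n".join(retrieve(question))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

print(build_prompt("how long do refund requests take"))
```

The point of the pattern is that the model answers from retrieved private data instead of relying only on what it learned in training, which is one plausible reason vector database usage is growing so quickly.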

Quick AI Hits ⚡️

ChatGPT Integrates with macOS Desktop Apps: Supports VS Code, Xcode, Terminal, iTerm2, enhancing coding assistance.

Stripe Launches New Agent Toolkit: Enables payment integration into AI workflows, enhancing LLM-powered agents.

Copyright Lawsuit Against OpenAI Dismissed: Judge dismisses suit from Raw Story and AlterNet, citing lack of harm but allowing the plaintiffs to refile.

Brookings Explores AI's Impact on Work: New report, "Generative AI, the American worker, and the future of work," examines AI's role in shaping future employment.

NVIDIA Introduces New AI Robotics Tools: Isaac Lab and Project GR00T launched, aiming to advance robot learning and humanoid development.

U.S. Halts Advanced Chip Exports to China: Commerce Department orders TSMC to stop exports, affecting AI chip supply.

The Washington Post Launches 'Ask The Post AI': New generative AI search tool unveiled, offering direct answers from archives.

AssemblyAI Introduces Universal-2 Model: New speech-to-text model enhances accuracy, setting a new industry standard.

Majority of Leaders Not Upskilling for AI: New BCG report reveals only 6% actively upskill, with 90% remaining observers.

ChatGPT's Impact on Chegg: How ChatGPT brought down Chegg, the online education giant.

AlphaFold3 Goes Open-Source: DeepMind releases AlphaFold3 code, accelerating protein modeling research.

Alibaba Cloud's Qwen Releases AI Coding Suite: 32B model rivals GPT-4 and Claude, supporting over 40 programming languages.

OpenAI to Release Autonomous Agent Platform: AI agent to perform tasks independently, set for January release.

Microsoft Shares 200 AI Adoption Examples: From Microsoft Copilot, showcasing AI integration across industries.

ICU Nurse Voices AI Concerns in Healthcare: Fears over loss of intuition and connection, as AI prioritizes efficiency.

Unitree Releases Open-Source G1 Dataset: Aids embodied AI and humanoid robotics, accelerating development.

Anthropic Introduces 'Prompt Autocorrect': Claude now refines user prompts automatically, enhancing multi-model optimization.

Gartner Predicts Power Shortages for AI Data Centers: 40% may face shortages by 2027, due to generative AI's energy demands.

Nous Research Launches Forge Reasoning API Beta: Enhances smaller models with advanced reasoning, rivaling larger AI systems.

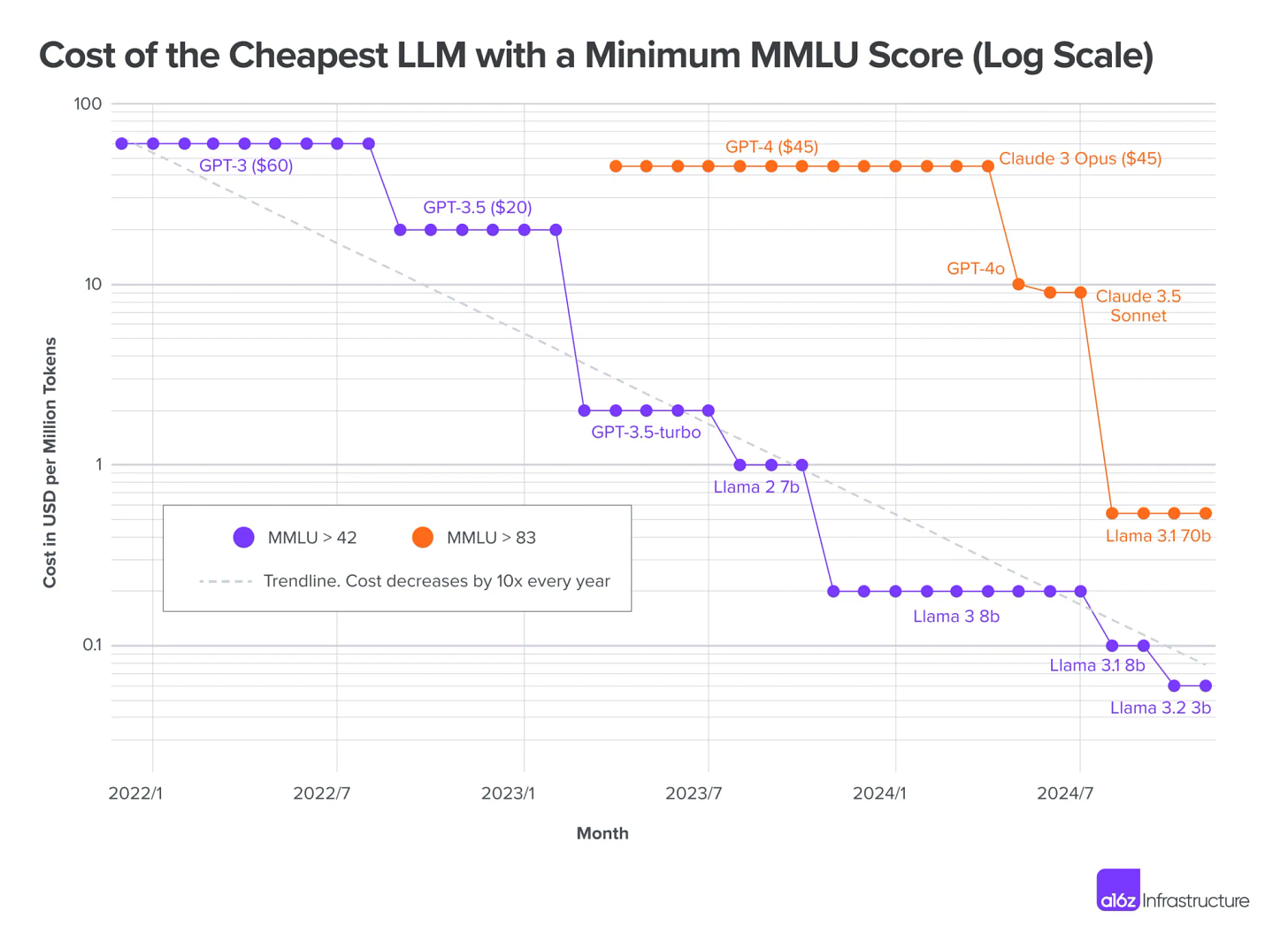

Chart of the Week

The cost of large language model (LLM) inference has decreased dramatically, falling by roughly 10x per year since GPT-3’s public debut in 2021. This trend, dubbed “LLMflation,” is fueled by advancements in GPUs, model quantization, software optimizations, and open-source competition, and it is unlocking new applications across industries. → Read the full article here.
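To get a feel for how quickly a 10x annual drop compounds, here is a back-of-the-envelope sketch. It assumes a constant 10x drop every year and normalizes the starting price to 1.0. The function name `relative_cost` is just an illustration, and real prices will not follow such a clean curve.

```python
def relative_cost(years_elapsed, annual_drop=10.0):
    """Cost relative to the starting price after compounding annual drops.

    Assumes the same multiplicative drop every year (a simplification).
    """
    return 1.0 / (annual_drop ** years_elapsed)

# Starting price is normalized to 1.0; each row shows the remaining fraction.
for years in range(4):
    print(f"after {years} year(s): {relative_cost(years):.4f}x the starting price")
```

Under that assumption, three years of 10x drops compound to a 1,000x reduction, which is why workloads that were too expensive a few years ago can now be practical.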

Content I Liked

In a recent Lex Fridman podcast, Anthropic’s Dario Amodei, Amanda Askell, and Chris Olah discussed the evolution of large language models (LLMs), scaling laws, and responsible AI development. They explored the importance of scaling for capturing complex patterns, limitations due to data and compute, and Anthropic’s safety-oriented research. The conversation highlighted Claude’s advancements, including varied model sizes and computer use, while addressing risks like misuse and autonomy as models grow more capable. They emphasized the art of prompt engineering, alignment through character traits, and a “race to the top” philosophy for ethical AI development. Amodei concluded by envisioning AGI’s potential to drive breakthroughs in science and medicine, creating deeper meaning in an automated world. → Listen to the full podcast here.

AI Fun

Test-time compute explained! 😺🤣

Source: @karpathy

That’s all from me. Thank you, and I’ll chat with you next week!

-Manny