[AI News] AI in 2025: What’s Next? DeepSeek V3, 2024 Trends, and the Future of Medicine and Education

AI in 2024 and What to Watch for in 2025

The world of large language models (LLMs) exploded this year, giving us way more options than ever before. Remember when OpenAI was basically the only game in town? Not anymore. Google is back, Anthropic is better than ever, and Amazon has "entered the chat" with its first LLM. Meanwhile, Meta, Mistral, and DeepSeek are making waves in the open-source space. There’s a ton of competition now, and that’s made everything way more exciting.

We’ve also seen businesses jump on the LLM bandwagon like never before. Companies are finding ways to work AI into their daily grind, boosting productivity and unlocking all kinds of new opportunities. And let’s not forget about video generation. Early in the year, everyone was buzzing about Sora; now it has finally launched, joining a crowded field of competitors. The whole generative video space is blowing up.

So, what’s next in 2025? Here are a few things to keep an eye on:

Agentic Workflows: Expect LLMs to tackle more complex tasks on their own, making everything smoother and more efficient.

Shifting LLM Landscape: How will OpenAI handle its move to being for-profit? And what about Elon's xAI?

AI and Robotics: OpenAI’s investments in companies like Figure are hinting at some cool stuff—think AI-powered robots helping out in factories or even around the house.

Generative Video Tech: The tools are just getting better and better, which will make entertainment more fun but also raise big questions about creativity and ethics.

Open Source from China: Firms like DeepSeek are stepping up big time, and that’s going to shake things up globally, especially when it comes to US-China relations.

US AI Policy: With a new Trump administration that’s all about AI and tech, we might see some big moves to ramp up adoption here in the States.

2025 is shaping up to be just as exciting and unpredictable as 2024. I’m here for it, and I’ll keep bringing you the latest updates and insights. Let’s see where this journey takes us next!

Cheers,

Manny

[OPEN-SOURCE]

DeepSeek V3: Where Efficiency Meets Performance in Open-Source AI

The Recap: The release of DeepSeek V3 by the Chinese AI company DeepSeek marks a significant advancement in open-source Large Language Model (LLM) development, challenging the performance of leading U.S. models with greater efficiency and accessibility.

Highlights:

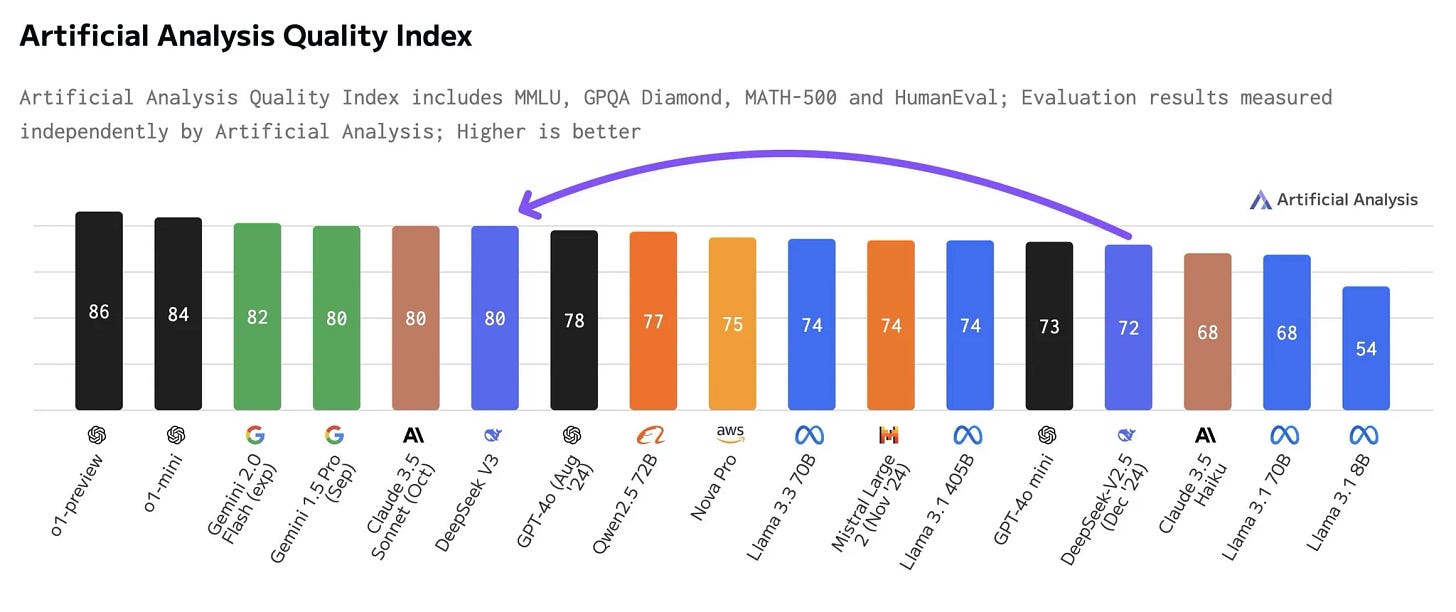

Performance Benchmark: DeepSeek V3 leads the open-source field and, on many benchmarks, surpasses closed models like OpenAI's GPT-4o while closely approaching Anthropic's Claude 3.5 Sonnet, establishing DeepSeek as a leader in Chinese AI.

Model Architecture: Utilizes an innovative Mixture of Experts (MoE) architecture with 671 billion total parameters, of which only 37 billion are activated for each token, significantly increasing its capacity over previous versions.

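Training Efficiency: Trained on 14.8 trillion tokens in just 2.788 million NVIDIA H800 GPU hours, showcasing remarkable efficiency, with an estimated training cost of approximately $5.6M. That figure is DeepSeek's own estimate: it assumes a rental price of $2 per GPU hour and covers only the final training run, not prior research or ablation experiments.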

Speed and Efficiency: Achieves an output speed of 89 tokens per second, a 4x increase from DeepSeek V2.5, demonstrating substantial improvements in inference optimization.

Open-Source Movement: Released with openly available weights under a license that permits commercial use, DeepSeek V3 promotes the democratization of AI, allowing developers to modify and build on it for a wide range of applications.

Cost-Effectiveness: Pricing remains competitive, especially with promotional rates until early February, offering significant savings compared to other frontier models.

Multilingual Capabilities: Shows strong performance across various languages, outscoring other open-weights models, which is crucial for global applicability.

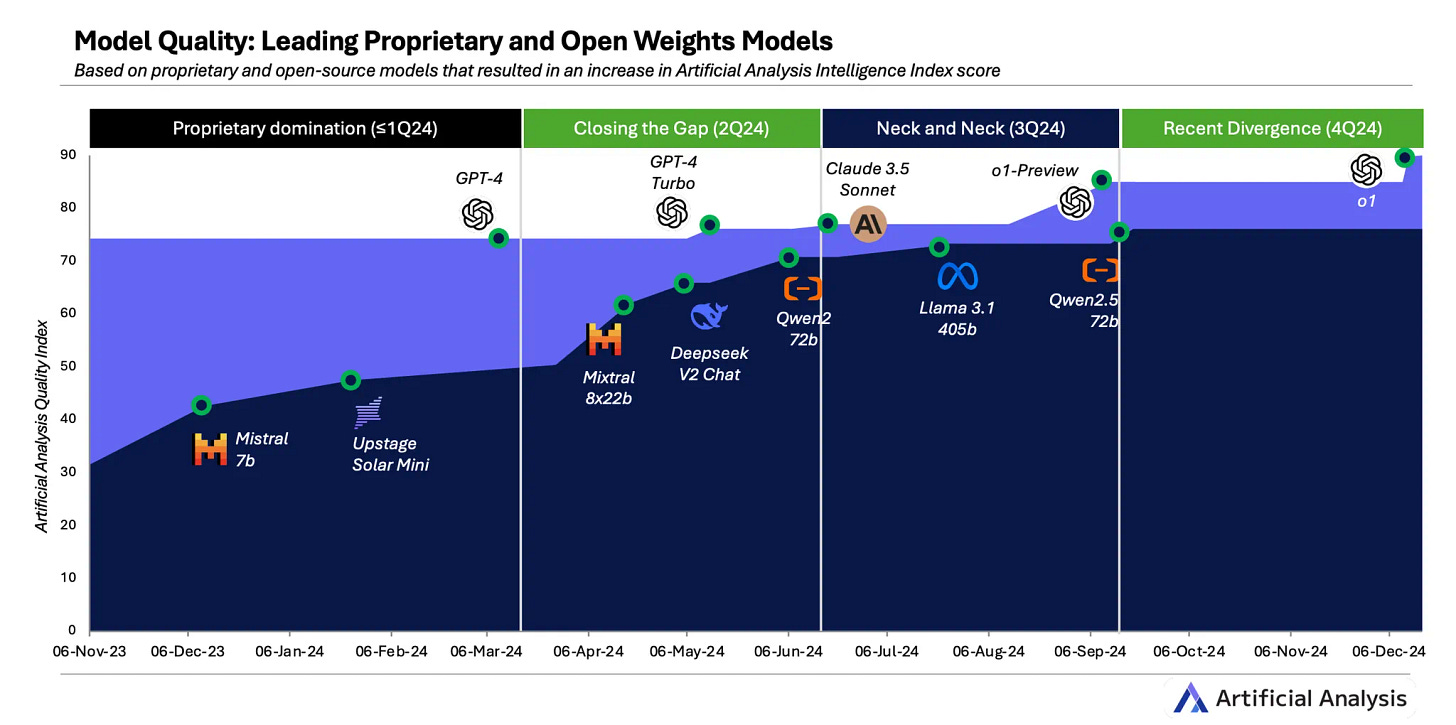

Global Impact: DeepSeek V3 showcases China's growing AI prowess and efficiency, potentially challenging US technological dominance and influencing global AI strategy, security, and international tech cooperation amid the ongoing US-China tech rivalry.
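To make the "671 billion total, 37 billion active" idea concrete, here is a toy sketch of top-k Mixture of Experts routing in plain Python: a router scores every expert for a token, but only the k highest-scoring experts actually run, so most parameters sit idle for any given token. The expert count, k, dimensions, and function names are illustrative assumptions, not DeepSeek V3's configuration; its real router uses many more experts and additional mechanisms such as shared experts. The last lines just redo the arithmetic behind the headline figures.

```python
import math
import random

# Toy MoE layer. NUM_EXPERTS, TOP_K and DIM are illustrative assumptions,
# not DeepSeek V3's actual configuration.
NUM_EXPERTS = 8
TOP_K = 2
DIM = 4

random.seed(0)


def random_matrix(rows, cols):
    """Random weights standing in for a trained layer."""
    return [[random.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vec):
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


experts = [random_matrix(DIM, DIM) for _ in range(NUM_EXPERTS)]  # each expert: a DIM x DIM map
router = random_matrix(NUM_EXPERTS, DIM)  # produces one score per expert


def moe_forward(token):
    """Send a token to its TOP_K best-scoring experts and mix their outputs."""
    scores = matvec(router, token)
    top = sorted(range(NUM_EXPERTS), key=lambda i: scores[i], reverse=True)[:TOP_K]
    gates = softmax([scores[i] for i in top])  # weights over the chosen experts only
    out = [0.0] * DIM
    for gate, i in zip(gates, top):
        expert_out = matvec(experts[i], token)  # only the selected experts compute
        out = [o + gate * e for o, e in zip(out, expert_out)]
    return out, top


output, used = moe_forward([1.0, 0.5, -0.3, 2.0])
print(f"experts used: {used} of {NUM_EXPERTS}")

# Headline arithmetic from the DeepSeek V3 figures quoted above.
active_share = 37 / 671                # active vs. total parameters
cost_per_gpu_hour = 5.6e6 / 2.788e6    # implied dollars per H800 GPU-hour
print(f"active parameter share: {active_share:.1%}")
print(f"implied cost per GPU-hour: ${cost_per_gpu_hour:.2f}")
```

Running it shows which 2 of the 8 toy experts handled the token, an active-parameter share of about 5.5%, and an implied cost of roughly $2 per GPU-hour, which matches the rental price DeepSeek assumed for its estimate.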

Key Takeaways: DeepSeek V3's release marks a pivotal moment in LLM development, emphasizing efficiency, open availability, and performance. It challenges the dominance of U.S.-based models and sets a precedent for building advanced AI with fewer resources. Its strength in coding, reasoning, and multilingual tasks points to a shift toward more accessible and versatile AI that could shape AI research and development worldwide. Its open release also invites broader participation in AI innovation, widening access to cutting-edge technology. → Read more here.

[AI TRENDS]

LangChain's 2024 State of AI Report: Key Trends in LLM Development

The Recap: LangChain's year-end analysis reveals significant shifts in how developers are building and deploying Large Language Model (LLM) applications, with trends showing increased complexity in workflows and growing adoption of AI agents.

Highlights:

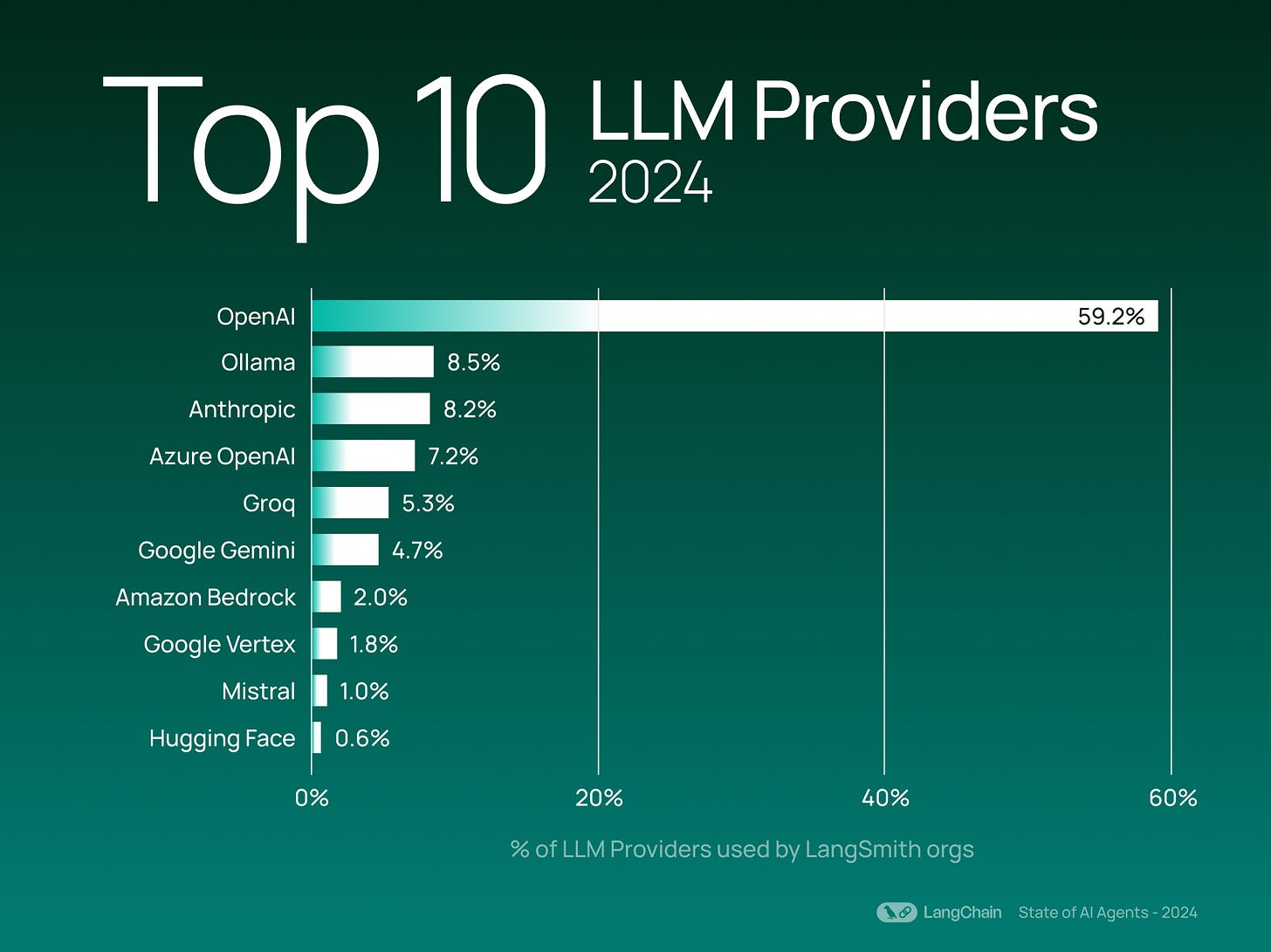

OpenAI remains the dominant LLM provider, used 6x more than runner-up Ollama

Open-source model providers (Ollama, Mistral, Hugging Face) now represent 20% of top provider usage

Chroma and FAISS continue as the most popular vector stores for retrieval operations

43% of LangSmith organizations are now using LangGraph for AI agent development

The share of traces involving tool calls jumped from 0.5% in 2023 to 21.9% in 2024

Average steps per trace nearly tripled, from 2.8 to 7.7, showing increased workflow complexity

Python remains the primary development language (84.7%) with JavaScript growing 3x year-over-year

Testing focuses primarily on relevance, correctness, exact match, and helpfulness metrics

Human feedback annotation runs grew 18x over the year
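For a sense of scale, the two workflow-complexity figures above can be turned into simple year-over-year multiples. This is plain arithmetic on the numbers quoted from the report; the helper name `growth_multiple` is illustrative and not part of any LangChain or LangSmith API.

```python
# Year-over-year multiples implied by the LangChain figures quoted above.
# `growth_multiple` is a hypothetical helper, used only for illustration.


def growth_multiple(before: float, after: float) -> float:
    """Return how many times larger `after` is than `before`."""
    return after / before


tool_call_share = growth_multiple(0.5, 21.9)  # % of traces with tool calls, 2023 -> 2024
steps_per_trace = growth_multiple(2.8, 7.7)   # average steps per trace, 2023 -> 2024

print(f"tool-call share grew ~{tool_call_share:.0f}x")
print(f"steps per trace grew ~{steps_per_trace:.2f}x")
```

That works out to roughly 44x for the tool-call share and about 2.75x for average steps per trace.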

Key Takeaways: The AI development landscape is evolving toward more complex, multi-step applications with increased emphasis on AI agents and automated workflows. While OpenAI maintains market dominance, there's growing interest in open-source alternatives and flexible deployment options. Developers are building more sophisticated applications while trying to optimize LLM usage, showing a mature approach to balancing capability with efficiency. The trends suggest a move toward more autonomous and complex AI systems, with increased focus on testing and quality assurance. → Read the full report here.

[AI TRENDS]

2024 AI Industry Analysis Report - Key Highlights

The Recap: The report analyzes major developments in AI throughout 2024, covering language models, image generation, video generation, and speech technologies.

Key Findings:

Language Models:

Multiple labs caught up to GPT-4's capabilities

Open-source models approached and surpassed GPT-4's performance level

The first models pushing beyond GPT-4's intelligence emerged late in 2024

Model inference pricing fell dramatically across all tiers

Context windows grew significantly, with 128k tokens becoming the new norm

Geographic Distribution:

US dominates the AI frontier

China holds clear second place

Only a handful of other countries demonstrated frontier-class capabilities

Market Dynamics:

Developer demand concentrated on top AI labs (OpenAI 83%, Meta 49%, Anthropic 46%)

Most developers using multiple models in their applications

~75% accessing models via hosted serverless endpoints

Model quality and price are primary decision drivers

Multimodal Progress:

Image generation saw major improvements in photorealism and text rendering

Video generation became more competitive after OpenAI's Sora announcement

Text-to-speech achieved new quality milestones with transformer-based models

Speech-to-text costs dropped dramatically while speed increased significantly

Key Takeaways: The AI field saw rapid advancement across all domains in 2024, with increased competition among providers, falling prices, and improving capabilities. Open-source alternatives gained ground, leaving a healthy ecosystem of both proprietary and open models. → Read the full report here.

Quick AI Headlines ⚡

AI Model Tests Diagnostic Limits but Isn't Ready to Replace Doctors Yet: New Scientist explores recent advancements in AI, noting that OpenAI's o1-preview model can outperform human doctors in some diagnostic tasks. Harvard Medical School's Adam Rodman led tests of the model, highlighting its potential to enhance medical treatment. Despite these advances, the article emphasizes how complex and challenging it would be to fully replace human doctors with AI, suggesting a supplementary role for AI in healthcare rather than a complete takeover.

China's AI Closing the Gap with U.S. Despite Chip Restrictions: WSJ reports that Chinese AI startups are quickly advancing, even without access to cutting-edge U.S. chips. Companies like DeepSeek and Moonshot AI are gaining ground, using strategies like reinforcement learning and the "mixture of experts" approach to optimize AI performance. Although direct comparisons to models like OpenAI’s o1 are challenging, Chinese developers are impressing some U.S. experts. This progress highlights China's resilience and potential to innovate despite technological barriers.

Arizona Approves AI-Only Online Charter School: The Arizona State Board for Charter Schools has approved Unbound Academy, an AI-driven online charter school. The school aims to address teacher shortages by offering personalized learning through AI, but it has ignited debate over its social and ethical implications. Critics worry about reduced human interaction and potential biases, while advocates highlight the benefits of customization. The move marks a significant development in integrating AI into education and raises challenges around oversight, accessibility, and educational equity.

Ukraine's Drone Footage Fuels AI Development in Warfare: Reuters reports that Ukraine is using 2 million hours of drone footage to train AI models for battlefield decision-making, underscoring the data's critical role in the ongoing conflict with Russia. Oleksandr Dmitriev, founder of OCHI, notes that this vast dataset, collected from more than 15,000 drone crews, aids in combat tactics, target identification, and assessing effectiveness. With both Ukraine and Russia increasingly using AI, how this data is applied could shape future warfare strategies, and it raises key political and ethical questions about AI in military contexts.

Palantir and Anduril Form Consortium to Compete for Defense Contracts: TechCrunch reports that Palantir and Anduril are forming a tech consortium to pursue Pentagon contracts, potentially challenging established defense giants like Lockheed Martin. The group is in talks with SpaceX, OpenAI, and other tech firms as it aims to bring new defense solutions and technologies to the Pentagon. Initial partnerships are expected to be announced in January, marking a significant shift in the competitive landscape of defense contracting.

Apptronik and Google DeepMind Join Forces to Enhance Humanoid Robotics: The Robot Report announces a strategic partnership between Apptronik and Google DeepMind to advance humanoid robots using AI. The collaboration aims to integrate Apptronik's robotics platform with DeepMind's AI expertise to develop intelligent and versatile humanoids, like the Apollo robot, which are designed to operate safely in dynamic environments. This partnership marks a significant step in creating robots capable of addressing global challenges across various industries.

AI's Unexpected, Under-the-Radar Influence on Elections: Wired analyzes the anticipated yet understated role of AI in 2024's global elections. While early concerns focused on misleading deepfakes, experts describe AI's subtle but significant use in election operations. Tools like ChatGPT helped draft campaign strategies and speeches, while AI translation helped campaigns reach broader audiences. Deepfakes were fewer than feared, but behind-the-scenes uses such as email and ad copywriting, especially for small-scale campaigns, point to AI's growing electoral impact.

OpenAI Expands Robotics Investments with Figure AI and Physical Intelligence: OpenAI's strategic moves highlight its active role in advancing robotics through key investments. Figure AI, backed by $675 million from investors like Nvidia and Microsoft, focuses on humanoid robots for logistics. Physical Intelligence, valued at $2.4 billion, aims to develop universal AI for diverse robotic applications. OpenAI's involvement signifies its broader interest in integrating AI into physical systems, reflecting a renewed commitment to bridging AI capabilities with real-world demands.

xAI Launches Standalone iOS App for Grok Chatbot: TechCrunch reports that xAI, founded by Elon Musk, is testing a standalone iOS app for its Grok chatbot, which was previously available only to users of X. Now live in beta in several countries, the app offers real-time web access, text rewriting, and image generation. The move aims to bring more users to Grok, which xAI positions as a maximally truthful and useful AI assistant, on mobile.

Chart of the Week 📊

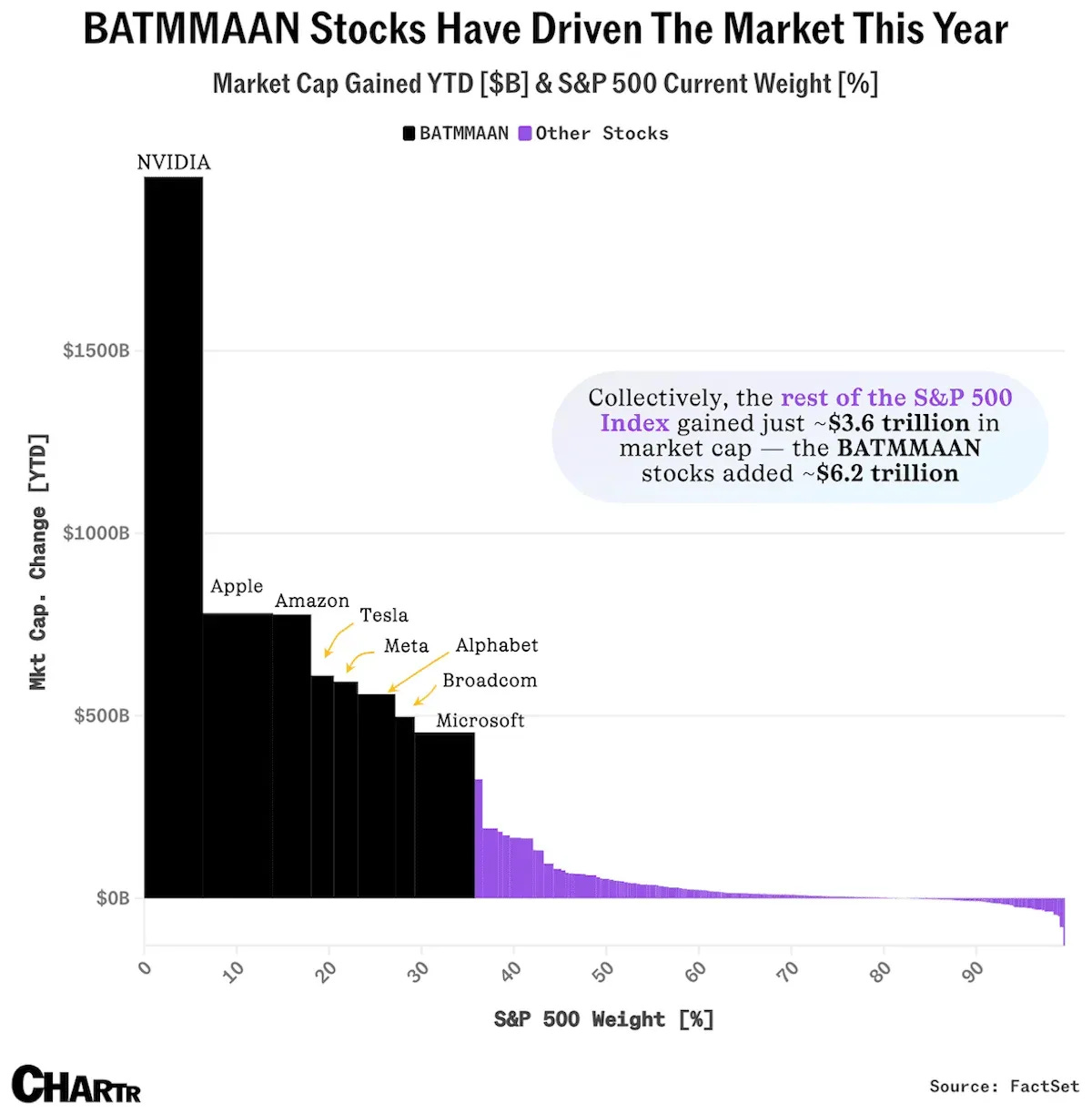

2024 has been a monumental year for NVIDIA, carrying the bulk of the load for U.S. stock performance alongside a handful of other tech giants. With the AI hype fueling its rise, NVIDIA has solidified itself as the quintessential example of "selling picks and shovels" during a gold rush. → Read more here.

Content I Like 👀

Jonathan reflects on how AI challenges his identity as a philosopher, moving from initial fear to a rethinking of philosophy itself. He compares the existential dread he felt to Augustine witnessing the fall of Rome, fearing AI’s automation of intellectual work. However, he realizes the threat he feels stems from viewing philosophy as production (publishing, lectures) rather than cultivation (personal growth and virtue). Drawing from Plato, Socrates, and Marcus Aurelius, he emphasizes philosophy as a tool for living a better life, which AI can augment rather than replace. → Watch full video here.

AI Art 👩‍🎨

A short scene by Jason Zada, made with Google’s new text-to-video model, Veo 2.

That’s all for me today. See you next week!

-Manny